Django ChatGPT Tutorial Series:

- Introduction

- Create Django Project with Modern Frontend Tooling

- Create Chat App

- Partial Form Submission With Turbo Frame

- Use Turbo Stream To Manipulate DOM Elements

- Send Turbo Stream Over Websocket

- Using OpenAI Streaming API With Celery

- Use Stimulus to Better Organize Javascript Code in Django

- Use Stimulus to Render Markdown and Highlight Code Block

- Use Stimulus to Improve UX of Message Form

- Source Code chatgpt-django-project

In this chapter, we will use Stimulus to improve the UX of the message form.

Form Submission Indicator

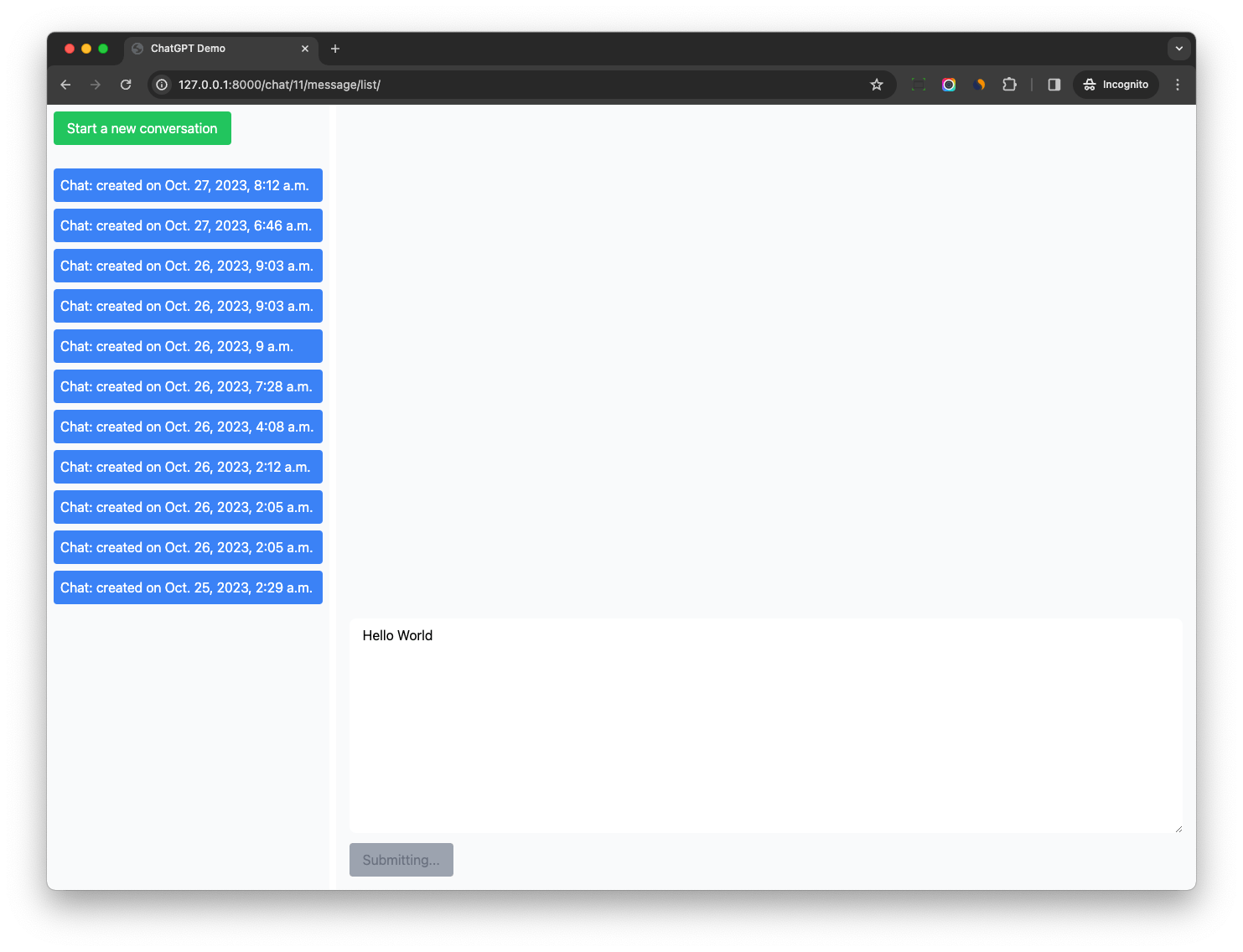

When the user submits the form, we want to change the button text to "Submitting..." and disable the button.

You might think this requires some JavaScript, but we can build this feature without writing any.

Since our form is already wrapped in a `turbo-frame` with `data-turbo="true"`, the form submission is handled by Turbo, so we can use Turbo's `data-turbo-submits-with` attribute to help us.

`data-turbo-submits-with` specifies text to display when submitting a form. It can be used on `input` or `button` elements. While the form is submitting, the element shows the value of `data-turbo-submits-with`; after the submission, the original text is restored. This is useful for giving the user feedback, such as showing a message like “Saving…” while an operation is in progress.

Update chatgpt_django_app/templates/message_create.html

```html
<button type="submit"
        class="transition-all bg-blue-500 hover:bg-blue-600 text-white py-2 px-4 rounded disabled:bg-gray-400 disabled:text-gray-500 disabled:cursor-not-allowed"
        data-turbo-submits-with="Submitting...">
  Submit
</button>
```

Notes:

- We add `data-turbo-submits-with="Submitting..."` to the submit button.
- We add `disabled:bg-gray-400 disabled:text-gray-500 disabled:cursor-not-allowed` to change the button style while the button is disabled during the form submission.
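To make the behavior concrete, here is a framework-free sketch of the swap-and-restore logic that `data-turbo-submits-with` provides. It is not Turbo's source code: `startSubmitting` and `finishSubmitting` are hypothetical names, and a plain object stands in for the DOM `<button>` so the snippet runs under Node.

```javascript
// Minimal sketch of the behavior described for data-turbo-submits-with.
// NOT Turbo's implementation; startSubmitting/finishSubmitting are hypothetical.
function startSubmitting(button) {
  button.disabled = true; // in the browser this activates the Tailwind disabled: styles
  const submitsWith = button.dataset.turboSubmitsWith;
  if (submitsWith) {
    button.originalText = button.textContent; // remember "Submit"
    button.textContent = submitsWith; // show "Submitting..."
  }
}

function finishSubmitting(button) {
  button.disabled = false;
  if (button.originalText !== undefined) {
    button.textContent = button.originalText; // restore "Submit"
    delete button.originalText;
  }
}

// Plain object standing in for <button data-turbo-submits-with="Submitting...">
const button = {
  textContent: "Submit",
  disabled: false,
  dataset: { turboSubmitsWith: "Submitting..." },
};

startSubmitting(button);
console.log(button.textContent, button.disabled); // Submitting... true
finishSubmitting(button);
console.log(button.textContent, button.disabled); // Submit false
```

In the real page Turbo does this for us, which is why the template only needs the attribute and the `disabled:` classes.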

Auto Grow TextArea

If we type multiple lines in the input box, its height does not increase, and we have to drag the bottom-right corner to enlarge it. That is not a good experience, so let's fix it.

There are already good libraries for this, so we do not need to reinvent the wheel.

```shell
$ npm install stimulus-textarea-autogrow
```

Update frontend/src/application/app.js

```javascript
// This is the scss entry file
import "../styles/index.scss";

import "@hotwired/turbo";
import { Application } from "@hotwired/stimulus";
import { definitionsFromContext } from "@hotwired/stimulus-webpack-helpers";
import TextareaAutogrow from 'stimulus-textarea-autogrow'; // new

window.Stimulus = Application.start();
const context = require.context("../controllers", true, /\.js$/);
window.Stimulus.load(definitionsFromContext(context));
window.Stimulus.register('textarea-autogrow', TextareaAutogrow); // new
```

We import `TextareaAutogrow` from `stimulus-textarea-autogrow` and register it with Stimulus under the identifier `textarea-autogrow`.
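`definitionsFromContext` registers every file in `../controllers` under an identifier derived from its filename, which is why `chatgpt_message_form_controller.js` (created later in this chapter) is referenced as `chatgpt-message-form` in the templates. The sketch below spells out that convention with a hypothetical `identifierFromFilename` function; it approximates what `@hotwired/stimulus-webpack-helpers` does rather than reproducing its code.

```javascript
// Hypothetical helper illustrating the filename -> identifier convention
// used when controllers are loaded with definitionsFromContext.
function identifierFromFilename(path) {
  // "./chatgpt_message_form_controller.js" -> "chatgpt_message_form"
  const match = path.match(/^(?:\.\/)?(.+?)[_-]controller\.[jt]s$/);
  if (!match) return undefined; // not a controller file
  // underscores become dashes; subdirectories are joined with "--"
  return match[1].replace(/_/g, "-").replace(/\//g, "--");
}

console.log(identifierFromFilename("./chatgpt_message_form_controller.js")); // chatgpt-message-form
console.log(identifierFromFilename("./markdown_controller.js")); // markdown
```

Controllers from npm packages, such as `TextareaAutogrow`, have no filename in that folder, so they are registered manually with `register` and an identifier of our choosing.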

Update chatgpt_django_app/chat/forms.py

```python
from django import forms
from django.core.validators import MinLengthValidator


class MessageForm(forms.ModelForm):
    content = forms.CharField(
        label='Content',
        widget=forms.Textarea(
            attrs={
                'class': "rounded-lg border-gray-300 block leading-normal border px-4 text-gray-700 bg-white "
                         "focus:outline-none py-2 appearance-none w-full",
                'data-controller': "textarea-autogrow",  # new
            },
        ),
        validators=[MinLengthValidator(2)]
    )
```

Notes:

- We add `'data-controller': "textarea-autogrow"` to the textarea's attributes. Stimulus then attaches a controller instance to the element, which makes the auto grow work.

Now restart webpack and test in your browser: the height of the textarea increases automatically as you type more lines.
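For the curious, a common way to implement an auto-growing textarea is to reset its height and then set it to the content's `scrollHeight` on every input. The sketch below assumes this is roughly what stimulus-textarea-autogrow does; it is not the library's source, `autogrow` is a hypothetical name, and a plain object stands in for the `<textarea>` so it runs under Node.

```javascript
// Minimal sketch of the usual auto-grow technique (an assumption about how
// such libraries work, not stimulus-textarea-autogrow's exact code).
function autogrow(textarea) {
  // Reset first so the textarea can also shrink when lines are deleted
  textarea.style.height = "auto";
  // Then grow to fit the full height of the content
  textarea.style.height = `${textarea.scrollHeight}px`;
}

// Plain object standing in for a <textarea> whose content is 96px tall
const fakeTextarea = { style: { height: "" }, scrollHeight: 96 };
autogrow(fakeTextarea);
console.log(fakeTextarea.style.height); // 96px
```

In a browser you would call `autogrow(textarea)` from an `input` event listener, so the height follows the content as the user types.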

Ctrl + Enter to Submit

Create frontend/src/controllers/chatgpt_message_form_controller.js

```javascript
import { Controller } from "@hotwired/stimulus";

export default class extends Controller {
  // Declaring the value maps data-chatgpt-message-form-target-value
  // to this.targetValue
  static values = {
    target: String,
  };

  connect() {
    this.focus();
  }

  focus() {
    // autofocus, so it can work after form reset by turbo stream
    const form = this.element;
    const textarea = form.querySelector("textarea");
    if (textarea) {
      textarea.focus();
    }
  }

  autoSubmit(e) {
    // only react to Ctrl+Enter or Meta+Enter
    if (!(e.key === "Enter" && (e.metaKey || e.ctrlKey))) return;
    const form = e.target.form;
    if (form) form.requestSubmit();
  }

  scroll(event) {
    // focusin bubbles, so only react when the textarea itself gains focus
    if (event.target.tagName === "TEXTAREA") {
      const target = document.querySelector(`#${this.targetValue}`);
      if (!target) return; // message list not found on the page
      target.parentElement.scrollTop = target.parentElement.scrollHeight;
    }
  }
}
```

Notes:

- We create a Stimulus controller for the message form.
- In the `connect` method, we focus the textarea element. This ensures the user can keep typing after getting a response from OpenAI, without clicking the textarea with the mouse.
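The guard at the top of `autoSubmit` can be checked on its own. Below it is pulled out into a standalone function (`isSubmitShortcut` is a hypothetical name) and exercised with plain objects instead of real `KeyboardEvent`s.

```javascript
// The same condition autoSubmit checks, extracted into a hypothetical
// standalone function so it can be tested without a browser.
function isSubmitShortcut(event) {
  return event.key === "Enter" && (event.metaKey || event.ctrlKey);
}

console.log(isSubmitShortcut({ key: "Enter", ctrlKey: true, metaKey: false })); // true
console.log(isSubmitShortcut({ key: "Enter", ctrlKey: false, metaKey: true })); // true
console.log(isSubmitShortcut({ key: "Enter", ctrlKey: false, metaKey: false })); // false
console.log(isSubmitShortcut({ key: "a", ctrlKey: true, metaKey: false })); // false
```

Note that in the form template of the next step, the `keydown.ctrl+enter` action filter already restricts which keystrokes reach `autoSubmit`, so the `metaKey` branch only matters if a Meta + Enter action is bound as well.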

Update chatgpt_django_app/templates/message_create.html

```html
<form
  method="post"
  action="{% url 'chat:message-create' view.kwargs.chat_pk %}"
  class="p-2 m-2"
  data-controller="chatgpt-message-form"
  data-action="keydown.ctrl+enter->chatgpt-message-form#autoSubmit focusin->chatgpt-message-form#scroll"
  data-chatgpt-message-form-target-value="chat-{{ view.kwargs.chat_pk }}-message-list"
>
  <!-- existing form content (fields and submit button) unchanged -->
</form>
```

Notes:

- `keydown.ctrl+enter->chatgpt-message-form#autoSubmit` means that when the user presses Ctrl + Enter in the textarea, the `autoSubmit` method of the `chatgpt-message-form` controller is called and submits the form.
- `focusin->chatgpt-message-form#scroll` means that when the textarea receives focus, the `scroll` method of the `chatgpt-message-form` controller is called and scrolls the message list to the bottom. Because `focusin` bubbles, it also fires for other focused elements inside the form, which is why `scroll` checks that the event target is the textarea.
- `data-chatgpt-message-form-target-value` passes the DOM id of the message list to the controller, which keeps the controller reusable. The controller reads it as `this.targetValue`.
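The attribute name follows Stimulus's values convention: a value called `target` on the `chatgpt-message-form` controller is read from `data-chatgpt-message-form-target-value`, and camelCase value names become kebab-case. Stimulus only exposes it as `this.targetValue` when the controller declares `target` in `static values`. The hypothetical helper below spells out the attribute-name mapping; it is an illustration, not part of Stimulus's API.

```javascript
// Hypothetical helper spelling out the Stimulus values naming convention:
// value `valueName` on controller `identifier` lives in
// data-<identifier>-<valueName in kebab-case>-value
function valueAttributeName(identifier, valueName) {
  const kebab = valueName.replace(/([a-z0-9])([A-Z])/g, "$1-$2").toLowerCase();
  return `data-${identifier}-${kebab}-value`;
}

console.log(valueAttributeName("chatgpt-message-form", "target")); // data-chatgpt-message-form-target-value
console.log(valueAttributeName("markdown", "target")); // data-markdown-target-value
```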

Auto Scroll

Next, we will make the message list scroll to the bottom automatically when a new message is appended.

Update chatgpt_django_app/chat/receiver.py

```python
from asgiref.sync import async_to_sync
from channels.layers import get_channel_layer
from django.db.models.signals import post_save
from django.db.transaction import on_commit
from django.dispatch import receiver

# Message, TurboStream and task_ai_chat are imported as in the previous chapters


@receiver(post_save, sender=Message)
def handle_user_message(sender, instance, created, **kwargs):
    html = (
        TurboStream(f"chat-{instance.chat.pk}-message-list")
        .append.template(
            "message_item.html",
            {
                "instance": instance,
                "turbo_stream": True,  # new
            },
        )
        .render()
    )

    channel_layer = get_channel_layer()
    group_name = f"turbo_stream.chat_{instance.chat.pk}"
    async_to_sync(channel_layer.group_send)(
        group_name, {"type": "html_message", "html": html}
    )

    if created and instance.role == Message.USER:
        message_instance = Message.objects.create(
            role=Message.ASSISTANT,
            content="Thinking...",
            chat=instance.chat,
        )
        # call openai chat task in Celery worker
        on_commit(lambda: task_ai_chat.delay(message_instance.pk))
```

Notes:

- We add `"turbo_stream": True` to the template context, so the template knows whether it is rendered for a Turbo Stream or for a normal page.

Update chatgpt_django_app/templates/message_item.html

```html
<div id="message_{{ instance.pk }}">
  {% if instance.role_label == 'User' %}
    <div class="p-4 m-4 max-w-full text-black rounded-lg bg-sky-100 prose"
         data-controller="markdown"
         data-content="{{ instance.content|escape }}"
         data-markdown-target-value="chat-{{ instance.chat_id }}-message-list"
         data-turbo-stream="{{ turbo_stream|yesno:"true,false" }}">
    </div>
  {% else %}
    <div class="p-4 m-4 max-w-full bg-gray-200 rounded-lg prose"
         data-controller="markdown"
         data-content="{{ instance.content|escape }}"
         data-markdown-target-value="chat-{{ instance.chat_id }}-message-list"
         data-turbo-stream="{{ turbo_stream|yesno:"true,false" }}">
    </div>
  {% endif %}
</div>
```

Notes:

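- `data-turbo-stream="{{ turbo_stream|yesno:"true,false" }}"` is used by the `markdown` controller to decide whether the element came from a Turbo Stream. On a normal page render `turbo_stream` is not in the context, so the attribute renders as `false`.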
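In the controller, this attribute is read through `dataset`: the `data-` prefix is dropped, the kebab-case name becomes camelCase (`turboStream`), and every value is a string. That is why the check compares against the string `'true'`; the string `"false"` is non-empty and therefore truthy. The helpers below (`datasetKey` and `cameFromTurboStream` are hypothetical names) mimic this with plain objects so they run under Node.

```javascript
// Hypothetical helpers mirroring how the markdown controller's check works.
// dataset key: drop "data-", then turn "-x" into "X" (kebab -> camelCase)
function datasetKey(attributeName) {
  return attributeName
    .replace(/^data-/, "")
    .replace(/-([a-z])/g, (_, letter) => letter.toUpperCase());
}

function cameFromTurboStream(dataset) {
  // dataset values are strings, and "false" is truthy, so compare explicitly
  return dataset[datasetKey("data-turbo-stream")] === "true";
}

console.log(datasetKey("data-turbo-stream")); // turboStream
console.log(cameFromTurboStream({ turboStream: "true" })); // true
console.log(cameFromTurboStream({ turboStream: "false" })); // false
```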

Update frontend/src/controllers/markdown_controller.js

```javascript
import { Controller } from "@hotwired/stimulus";
import { marked } from "marked";
import hljs from "highlight.js";
import "highlight.js/styles/tomorrow-night-blue.css";

export default class extends Controller {
  static values = {
    target: String,
  };

  connect() {
    this.parse();
    if (this.element.dataset.turboStream === "true") {
      // if the message came from a Turbo Stream, scroll to the bottom to display it
      this.scroll();
    }
  }

  parse() {
    const renderer = new marked.Renderer();
    renderer.code = function (code, language) {
      const validLanguage = hljs.getLanguage(language) ? language : "plaintext";
      const highlightedCode = hljs.highlight(code, {
        language: validLanguage,
      }).value;
      return `<pre><code class="hljs ${validLanguage}">${highlightedCode}</code></pre>`;
    };
    const html = marked.parse(this.element.dataset.content, { renderer });
    this.element.innerHTML = html;
  }

  scroll() {
    const target = document.querySelector(`#${this.targetValue}`);
    if (!target) return; // message list not found on the page
    target.parentElement.scrollTop = target.parentElement.scrollHeight;
  }
}
```

Notes:

- After parsing the markdown content, we check whether the message came from a Turbo Stream; if so, we scroll the message list to the bottom.

Now when we send a message, the message list scrolls to the bottom automatically while we receive the response from OpenAI.
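Both `scroll` methods in this chapter use the same trick: assign the scroll container's `scrollHeight` to its `scrollTop`. Browsers clamp `scrollTop` to the maximum scrollable offset, so this always lands at the bottom. If you want to avoid the duplication, the logic could live in one shared function; `scrollListToBottom` below is a hypothetical sketch that takes the document as a parameter so it can be exercised with stub objects under Node.

```javascript
// Hypothetical shared helper; not part of the tutorial's source code.
// `doc` is the document (or a stub), `listId` the message list's DOM id.
function scrollListToBottom(doc, listId) {
  const list = doc.querySelector(`#${listId}`);
  // as in the controllers, the list's parent is the scrollable container
  if (!list || !list.parentElement) return false;
  const container = list.parentElement;
  container.scrollTop = container.scrollHeight;
  return true;
}

// Stub objects standing in for the DOM
const container = { scrollTop: 0, scrollHeight: 1200 };
const list = { parentElement: container };
const stubDocument = {
  querySelector: (selector) => (selector === "#chat-1-message-list" ? list : null),
};

console.log(scrollListToBottom(stubDocument, "chat-1-message-list")); // true
console.log(container.scrollTop); // 1200
console.log(scrollListToBottom(stubDocument, "missing-list")); // false
```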

Conclusion

In this article, we improved the UI/UX by leveraging Turbo and npm packages such as stimulus-textarea-autogrow. All the frontend code we wrote lives in the following three Stimulus controllers:

- chatgpt_message_form_controller.js
- markdown_controller.js
- websocket_controller.js

If you want to dive deeper into Turbo and Stimulus, please check my book The Definitive Guide to Hotwire and Django (2.0.0).
